Improved GDP nowcasting using large datasets

There is a wealth of economic data available to forecast GDP growth in real time (more than a thousand series in this study). To make the most of it, "modern" methods, some of which are based on artificial intelligence, offer an interesting perspective. For example, random forests and dynamic factor models predict GDP more accurately at the beginning of a quarter than some traditional models.

Business outlook surveys, economic data and financial time series give forecasters a considerable amount of information for GDP nowcasting, that is, predicting economic growth in the very short term. Business surveys generate not only synthetic indicators but also disaggregated subsector survey data: more than a thousand time series containing much supplementary information that can be harnessed to improve short-term forecasts.

Conventional forecasting methods are ill-suited to processing such large datasets, and most GDP nowcasting is still based on linear regression on a relatively small number of variables. Over the past two decades, however, statistical methods capable of handling far larger datasets have been developed. Dynamic factor models are one example: they summarise the information contained in many series in a few common factors while requiring only limited computing resources.
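The factor idea can be illustrated with a minimal sketch. It assumes the common static approximation in which factors are estimated as the first principal components of a standardised panel and GDP growth is then regressed on them; a full dynamic factor model would also model the factors' dynamics (typically in state-space form), and nothing here should be read as the study's own specification. The data are simulated, the panel is assumed to be already aligned at quarterly frequency, and the names `extract_factors` and `fit_factor_regression` are hypothetical.

```python
import numpy as np


def extract_factors(panel: np.ndarray, n_factors: int) -> np.ndarray:
    """Estimate common factors as the first principal components of a standardised panel.

    panel: array of shape (T, N), T periods by N indicator series.
    Returns an array of shape (T, n_factors).
    """
    # Standardise each series so that no single indicator dominates the factors
    z = (panel - panel.mean(axis=0)) / panel.std(axis=0)
    # The leading left singular vectors (scaled by singular values) are the principal components
    u, s, _ = np.linalg.svd(z, full_matrices=False)
    return u[:, :n_factors] * s[:n_factors]


def fit_factor_regression(factors: np.ndarray, gdp: np.ndarray) -> np.ndarray:
    """OLS of GDP growth on a constant and the estimated factors."""
    X = np.column_stack([np.ones(len(factors)), factors])
    beta, *_ = np.linalg.lstsq(X, gdp, rcond=None)
    return beta


# Simulated example (hypothetical): 80 quarters, 1,000 indicators driven by one common factor
rng = np.random.default_rng(0)
T, N = 80, 1000
true_factor = rng.standard_normal((T, 1))
panel = true_factor @ rng.standard_normal((1, N)) + rng.standard_normal((T, N))
gdp = 0.5 * true_factor[:, 0] + 0.1 * rng.standard_normal(T)

factors = extract_factors(panel, n_factors=2)
beta = fit_factor_regression(factors, gdp)
fitted = np.column_stack([np.ones(T), factors]) @ beta
r2 = 1 - np.var(gdp - fitted) / np.var(gdp)
print("in-sample R^2:", round(float(r2), 3))
```

The point of the sketch is the compression: a thousand series are reduced to two regressors by a single singular value decomposition, which is why such models remain cheap to run.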

More recently, as computing power has increased, machine learning methods such as random forests and neural networks have been developed and have gained popularity. These methods apply new techniques for filtering, sorting and processing information.

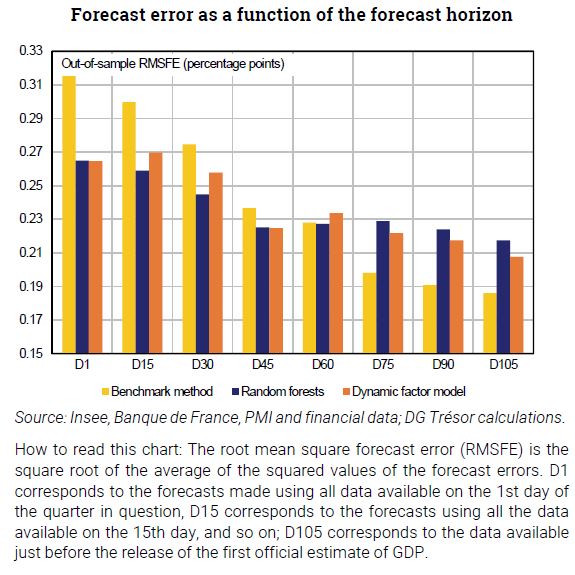

Some of these methods can improve short-term GDP growth forecasting performance by using large databases that include, among other things, subsector-level survey data chosen through a variable preselection process. Random forests appear to offer an appropriate method for selecting, at different dates, the variables most likely to provide information on current GDP.
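Variable preselection with a random forest can be sketched as follows, under stated assumptions: each tree is grown on a bootstrap sample of periods, each split considers a random subset of about a third of the variables, and a variable's importance is the total reduction in squared error from the splits that use it (the "mean decrease in impurity" measure). The series with the highest importance are kept. Only importances are computed, not forecasts; the data are simulated, and the function names and settings (`n_trees`, `max_depth`, `k`) are hypothetical choices rather than those of the study.

```python
import numpy as np


def best_split(X, y, features):
    """Find the split, among candidate features, that most reduces the sum of squared errors."""
    n = len(y)
    parent_sse = ((y - y.mean()) ** 2).sum()
    best_gain, best_j, best_thr = 0.0, None, None
    k = np.arange(1, n)  # number of observations sent to the left child
    for j in features:
        order = np.argsort(X[:, j])
        xs, ys = X[order, j], y[order]
        csum, csq = np.cumsum(ys), np.cumsum(ys ** 2)
        left_sum, left_sq = csum[:-1], csq[:-1]
        right_sum, right_sq = csum[-1] - left_sum, csq[-1] - left_sq
        # SSE of a child node = sum(y^2) - (sum y)^2 / count
        sse = (left_sq - left_sum ** 2 / k) + (right_sq - right_sum ** 2 / (n - k))
        sse[xs[:-1] == xs[1:]] = np.inf  # no split between equal values
        i = int(np.argmin(sse))
        if parent_sse - sse[i] > best_gain:
            best_gain, best_j = parent_sse - sse[i], j
            best_thr = (xs[i] + xs[i + 1]) / 2
    return best_gain, best_j, best_thr


def grow_tree(X, y, depth, max_depth, n_try, rng, importance):
    """Grow one regression tree, adding each split's SSE reduction to `importance`."""
    if depth >= max_depth or len(y) < 5:
        return
    # Each split only considers a random subset of the variables
    candidates = rng.choice(X.shape[1], size=n_try, replace=False)
    gain, j, thr = best_split(X, y, candidates)
    if j is None:
        return
    importance[j] += gain
    left = X[:, j] <= thr
    grow_tree(X[left], y[left], depth + 1, max_depth, n_try, rng, importance)
    grow_tree(X[~left], y[~left], depth + 1, max_depth, n_try, rng, importance)


def forest_importance(X, y, n_trees=100, max_depth=4, seed=0):
    """Impurity-based importance of each column of X, normalised to sum to 1."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    n_try = max(1, p // 3)
    importance = np.zeros(p)
    for _ in range(n_trees):
        rows = rng.integers(0, n, size=n)  # bootstrap sample of periods
        grow_tree(X[rows], y[rows], 0, max_depth, n_try, rng, importance)
    return importance / importance.sum()


def preselect(X, y, k):
    """Indices of the k variables with the highest forest importance, best first."""
    return np.argsort(forest_importance(X, y))[::-1][:k]


# Simulated example (hypothetical): 120 periods, 60 candidate series, two of them informative
rng = np.random.default_rng(1)
X = rng.standard_normal((120, 60))
y = 2.0 * X[:, 3] - 1.5 * X[:, 17] + 0.5 * rng.standard_normal(120)
selected = preselect(X, y, k=5)
print("preselected series:", selected)
```

To select variables "at different dates", one would rerun `preselect` on only the columns actually available at each point in the quarter; this is an illustrative reading of the text, not a description of the study's procedure.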

Models based on large datasets deliver their greatest gains in GDP nowcasting performance over conventional models in the earlier part of the quarter, before the first "hard" (quantitative) data become available.